Page 45 - Chip Scale Review_May June_2023-digital

P. 45

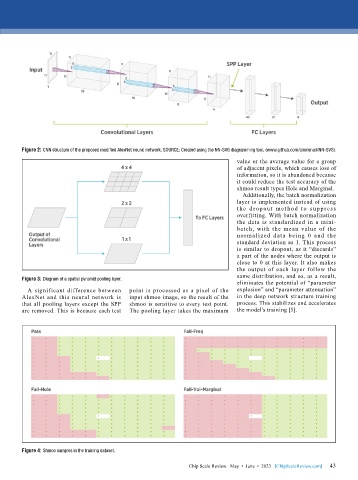

Figure 2: CNN structure of the proposed modified AlexNet neural network. SOURCE: Created using the NN-SVG diagramming tool, (www.github.com/alexlenail/NN-SVG).

value or the average value for a group

of adjacent pixels, which causes loss of

information, so it is abandoned because

it could reduce the test accuracy of the

shmoo result types Hole and Marginal.

Additionally, the batch normalization

layer is implemented instead of using

t he d ropout met hod to suppress

overfitting. With batch normalization

the data is standardized in a mini-

batch, with the mean value of the

nor malized data being 0 and the

standard deviation as 1. This process

is similar to dropout, as it “discards”

a part of the nodes where the output is

close to 0 at this layer. It also makes

the output of each layer follow the

same distribution, and so, as a result,

Figure 3: Diagram of a spatial pyramid pooling layer.

eliminates the potential of “parameter

A significant difference between point is processed as a pixel of the explosion” and “parameter attenuation”

AlexNet and this neural network is input shmoo image, so the result of the in the deep network structure training

that all pooling layers except the SPP shmoo is sensitive to every test point. process. This stabilizes and accelerates

are removed. This is because each test The pooling layer takes the maximum the model’s training [5].

Figure 4: Shmoo samples in the training dataset.

43

Chip Scale Review May • June • 2023 [ChipScaleReview.com] 43